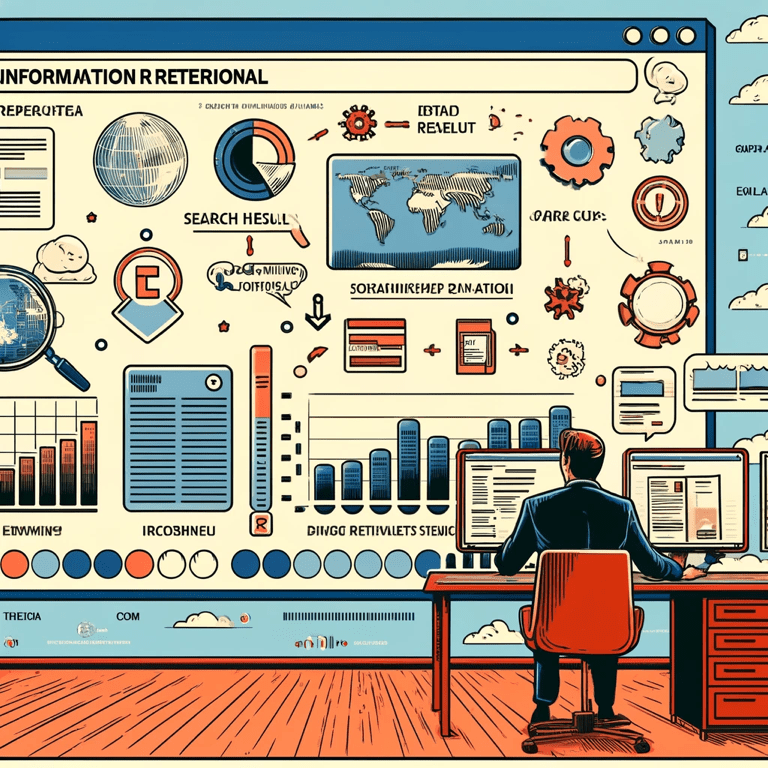

Hey there, data enthusiasts! We’re diving into the fascinating world of Learning to Rank (LTR) methods, a crucial machine learning tool in information retrieval. Whether you’re a seasoned data scientist or just starting out, understanding LTR can significantly enhance your ability to provide users with the most relevant search results. Let’s break it down!

Introduction to Learning to Rank methods

Definition and importance in information retrieval

Learning to Rank (LTR) is a supervised machine learning technique used to rank a list of documents or items by their relevance to a query or user. LTR is crucial in information retrieval and search engines because it helps provide users with the most relevant search results.

Understanding Learning to Rank Methods

Pointwise, Pairwise Ranking, and Listwise approaches

Pointwise approach: scores each document independently with a ground truth target value, similar to traditional regression and classification tasks.

Pairwise ranking approach: compares pairs of documents for a given query and learns which document in each pair should rank higher. It is used to overcome the drawbacks of pointwise ranking, which ignores the relative order of documents.

Listwise approach: takes into account the list of ranked documents, along with their relevance labels, to learn relationships between items in a list.
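To make the difference concrete, here is a minimal sketch of how the same judged results for one query could be turned into training examples under each approach. The document IDs and relevance labels are made up for illustration.

```python
from itertools import combinations

# Hypothetical judged results for one query: (doc_id, relevance label).
judged = [("d1", 2), ("d2", 0), ("d3", 1)]

# Pointwise: one (document, label) example per document.
pointwise = [(doc, rel) for doc, rel in judged]

# Pairwise: one example per pair with different labels,
# ordered so that the more relevant document comes first.
pairwise = [
    (a, b) if rel_a > rel_b else (b, a)
    for (a, rel_a), (b, rel_b) in combinations(judged, 2)
    if rel_a != rel_b
]

# Listwise: the whole list and its labels form a single example.
listwise = ([doc for doc, _ in judged], [rel for _, rel in judged])

print(pointwise)
print(pairwise)
print(listwise)
```

Note how the pairwise set grows quadratically with the number of documents per query, while the listwise approach keeps one example per query.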

Machine Learning for Ranking

Training data preparation and optimisation

Training data consists of lists of items with some partial order specified between the items in each list. The goal of constructing the ranking model is to rank new, unseen lists in a similar way to the rankings in the training data. Optimisation is typically performed with a gradient descent algorithm.

Loss function: the key to ranking performance

The choice of the loss function is the distinctive element for Learning to Rank machine learning models. Examples of loss functions include mean squared error, binary cross entropy loss, and classification loss on pairs of documents.

Choosing the right loss function: MSE, BCEL, and CL Explained

When it comes to machine learning, the choice of loss function can make or break your model’s performance. This is especially true in Learning to Rank methods (LTR), where the goal is to rank items accurately based on their relevance. Let’s dive into three commonly used loss functions—Mean Squared Error (MSE), Binary Cross-Entropy Loss (BCEL), and Classification Loss (CL)—and understand their differences and applications.

Mean Squared Error (MSE)

Definition: Mean Squared Error measures the average squared difference between the predicted values and the actual values. It’s a widely used loss function for regression tasks.

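Formula:

\[ \text{MSE} = \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 \]

where \( y_i \) is the actual value, \( \hat{y}_i \) is the predicted value, and \( n \) is the number of examples.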

Use Case: MSE is particularly useful when you want your model to predict exact numerical values and is less effective for classification or ranking tasks where the focus is on relative order rather than exact predictions.

Pros:

- Simple and intuitive.

- Penalises larger errors more heavily, which can lead to more precise predictions.

Cons:

- Sensitive to outliers, as large errors disproportionately affect the MSE.

Example: In a regression task predicting house prices, MSE helps ensure the predicted prices are as close as possible to the actual prices.
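As a quick illustration, MSE can be computed in a few lines of plain Python. The labels and scores below are hypothetical, and the second call shows how a single large error dominates the result.

```python
def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("inputs must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical graded relevance labels and model scores for four documents.
labels = [3.0, 1.0, 0.0, 2.0]
scores = [2.5, 1.5, 0.0, 2.0]
small_errors = mean_squared_error(labels, scores)

# One outlier prediction: three perfect scores and one that is off by 4.
one_outlier = mean_squared_error([0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 4.0])

print(small_errors, one_outlier)
```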

Binary Cross-Entropy Loss (BCEL)

Definition: Binary Cross-Entropy Loss, also known as Log Loss, measures the performance of a classification model whose output is a probability value between 0 and 1. It’s commonly used for binary classification tasks.

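Formula:

\[ \text{BCE} = -\frac{1}{n} \sum_{i=1}^{n} \left[ y_i \log(\hat{y}_i) + (1 - y_i) \log(1 - \hat{y}_i) \right] \]

where \( y_i \) is the actual binary label (0 or 1), \( \hat{y}_i \) is the predicted probability, and \( n \) is the number of examples.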

Use Case: BCEL is ideal for binary classification problems, including spam detection, medical diagnoses (e.g., disease vs. no disease), and learning to rank where each item is independently scored.

Pros:

- Provides probability outputs, which can be useful for threshold-based decision making.

- Penalises confident but incorrect predictions more than less confident ones.

Cons:

- Can be sensitive to class imbalance if not handled properly.

Example: In a spam detection system, BCEL helps distinguish between spam and non-spam emails by outputting probabilities.
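The sketch below computes binary cross-entropy in plain Python and compares confident correct predictions with confident incorrect ones. The clipping constant `eps` is an assumption added here to avoid taking the logarithm of zero.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(y_true)


labels = [1, 0]
confident_right = binary_cross_entropy(labels, [0.9, 0.1])
confident_wrong = binary_cross_entropy(labels, [0.1, 0.9])

print(confident_right, confident_wrong)
```

The second value is much larger than the first, which is the "penalises confident but incorrect predictions" behaviour in practice.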

Classification Loss (CL)

Definition: Classification Loss, often referred to in the context of pairwise ranking as hinge loss or pairwise logistic loss, focuses on the correct ranking order between pairs of items.

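Pairwise Logistic Loss Formula:

\[ L = \sum_{(i, j) \in P} \log\left(1 + e^{-(s_i - s_j)}\right) \]

where \( s_i \) and \( s_j \) are the scores of items \( i \) and \( j \), respectively, and \( P \) is the set of item pairs in which item \( i \) should rank above item \( j \).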

Use Case: CL is particularly useful in learning to rank scenarios where the goal is to optimise the order of items based on their relevance, such as search engine results and recommendation systems.

Pros:

- Directly optimises ranking performance by focusing on the relative order of items.

- Reduces the impact of outliers compared to MSE.

Cons:

- Requires careful selection of item pairs, which can be computationally intensive.

Example: In a search engine, CL ensures that more relevant documents are ranked higher than less relevant ones, improving the overall user experience.
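Here is a minimal sketch of the pairwise logistic loss in plain Python. It assumes each pair `(i, j)` means "item i should rank above item j"; the scores are invented for illustration.

```python
import math


def pairwise_logistic_loss(scores, pairs):
    """Sum of log(1 + exp(-(s_i - s_j))) over pairs (i, j) where i should outrank j."""
    return sum(math.log1p(math.exp(-(scores[i] - scores[j]))) for i, j in pairs)


# Item 0 is the most relevant, item 2 the least.
pairs = [(0, 1), (0, 2), (1, 2)]

good = pairwise_logistic_loss([2.0, 1.0, 0.0], pairs)  # scores agree with the pairs
bad = pairwise_logistic_loss([0.0, 1.0, 2.0], pairs)   # scores reverse the order

print(good, bad)
```

Only score differences enter the loss, so adding a constant to every score leaves it unchanged. That is why this loss cares about order rather than exact values.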

Choosing the Right Loss Function

- Use MSE: When your primary goal is to predict precise numerical values and the importance of each prediction is equal.

- Use BCEL: When dealing with binary classification problems and you need probability outputs for further decision-making processes.

- Use CL: When the task involves ranking items, and the primary objective is to get the order right rather than the exact scores.

Each loss function has its strengths and specific use cases. Understanding the nuances of MSE, BCEL, and CL helps you choose the most appropriate one for your particular machine learning problem, leading to better model performance and more accurate predictions.

Approaches to Learning to Rank

Neural network-based and gradient-based methods

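RankNet uses binary cross-entropy loss on pairs of documents: the model scores each document, and the logistic function of the score difference gives the probability that the first document should rank above the second.

RankNet: Example in Python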

```python
import torch
import torch.nn as nn
import torch.optim as optim


class RankNet(nn.Module):
    """Scores a single document; pairs are compared via the score difference."""

    def __init__(self, input_size):
        super().__init__()
        self.linear = nn.Linear(input_size, 1)

    def forward(self, x):
        # Return one raw score per document, shape (batch,)
        return self.linear(x).squeeze(-1)


# Example usage
input_size = 10
model = RankNet(input_size)
criterion = nn.BCELoss()
optimizer = optim.Adam(model.parameters(), lr=0.001)

# Dummy data: pairs of documents with features and their relevance
x1 = torch.rand((5, input_size))
x2 = torch.rand((5, input_size))
# 1 if x1 is more relevant than x2, else 0
y = torch.tensor([1, 0, 1, 1, 0], dtype=torch.float32)

# Training loop
for epoch in range(100):
    optimizer.zero_grad()
    s1 = model(x1)
    s2 = model(x2)
    # Probability that x1 ranks above x2: logistic function of the score difference
    p = torch.sigmoid(s1 - s2)
    loss = criterion(p, y)
    loss.backward()
    optimizer.step()

print(f'Trained RankNet Model: {model}')
```

LambdaRank defines the gradients of an implicit loss function directly, scaling them so that documents near the top of the ranking receive much larger gradients. ListNet proposes a learning method that optimises a listwise loss function based on top-k probability.
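To give a feel for the listwise idea, here is a minimal sketch of a ListNet-style loss for the simplest case, k = 1 (the "top-one" probability). It assumes the target distribution is a softmax over the relevance labels and the predicted distribution is a softmax over the model scores; the numbers are made up.

```python
import math


def softmax(values):
    """Turn a list of numbers into a probability distribution."""
    m = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def listnet_top1_loss(scores, labels):
    """Cross-entropy between the top-one distributions of labels and scores."""
    target = softmax(labels)
    predicted = softmax(scores)
    return -sum(t * math.log(p) for t, p in zip(target, predicted))


labels = [2.0, 1.0, 0.0]
aligned = listnet_top1_loss([2.0, 1.0, 0.0], labels)    # scores agree with labels
reversed_ = listnet_top1_loss([0.0, 1.0, 2.0], labels)  # scores reverse the order

print(aligned, reversed_)
```

The loss is computed over the whole list at once, so there is one loss value per query rather than one per document or per pair.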

LambdaRank: Example in
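As a rough sketch of the idea (not a full LambdaRank implementation), the per-document "lambda" gradients for one query can be computed in plain Python. This assumes a linear-gain DCG and scales each pairwise RankNet gradient by the change in NDCG that swapping the two documents would cause. The function names and data are illustrative.

```python
import math


def dcg(relevances):
    """DCG with linear gain for a list of labels in ranked order."""
    return sum(rel / math.log2(pos + 2) for pos, rel in enumerate(relevances))


def lambda_gradients(scores, labels):
    """Per-document lambdas for one query (gradient of the implicit loss w.r.t. scores)."""
    n = len(scores)
    order = sorted(range(n), key=lambda i: scores[i], reverse=True)
    rank = {doc: pos for pos, doc in enumerate(order)}  # 0-based position
    ideal = dcg(sorted(labels, reverse=True)) or 1.0
    lambdas = [0.0] * n
    for i in range(n):
        for j in range(n):
            if labels[i] <= labels[j]:
                continue  # only pairs where i is more relevant than j
            # |change in NDCG| if documents i and j swapped positions
            disc_i = 1.0 / math.log2(rank[i] + 2)
            disc_j = 1.0 / math.log2(rank[j] + 2)
            delta = abs((labels[i] - labels[j]) * (disc_i - disc_j)) / ideal
            # RankNet pairwise gradient, scaled by the metric change
            grad = -delta / (1.0 + math.exp(scores[i] - scores[j]))
            lambdas[i] += grad
            lambdas[j] -= grad
    return lambdas


# The most relevant document (index 0) currently has the lowest score.
scores = [0.1, 0.5, 0.9]
labels = [2, 1, 0]
lambdas = lambda_gradients(scores, labels)
print(lambdas)
```

A negative lambda means gradient descent will push that document's score up; here the most relevant document gets a negative lambda and the least relevant one a positive lambda.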

Overcoming Challenges in Learning to Rank

Position bias and distributed training

Position bias is a common issue in learning to rank, where user clicks are biased towards higher-ranked results.

XGBoost implements the Unbiased LambdaMART algorithm to debias position-dependent click data. It also supports distributed training of ranking models through integrations with frameworks including Dask, Spark, and PySpark.

Evaluating and Refining Ranking Models

Metrics for ranking performance and best practices

Evaluation measures include DCG, NDCG, MAP, MRR, and precision.

Selecting and designing good features is an important area in machine learning, which is called feature engineering.

### Understanding Evaluation Measures: DCG, NDCG, MAP, MRR, and Precision

Evaluating the performance of ranking models is crucial to ensure they meet the desired objectives. Different evaluation measures serve different purposes and provide various insights into how well your model ranks items. Let’s break down five commonly used evaluation measures: Discounted Cumulative Gain (DCG), Normalized DCG (NDCG), Mean Average Precision (MAP), Mean Reciprocal Rank (MRR), and Precision.

Discounted Cumulative Gain (DCG)

Definition: DCG is a measure of ranking quality that evaluates the relevance of a document based on its position in the result list. The gain is accumulated from the top of the list to the bottom, with the gain of each result discounted at lower ranks.

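Formula:

\[ \text{DCG}_p = \sum_{i=1}^{p} \frac{rel_i}{\log_2(i + 1)} \]

where \( rel_i \) is the relevance score of the result at position \( i \) and \( p \) is the number of ranked positions considered.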

Use Case: DCG is useful for evaluating the relevance of documents retrieved by search engines, where higher-ranked documents are more important.

Pros:

- Takes into account the position of relevant documents.

- Higher relevance scores at the top of the list have a larger impact.

Cons:

- Raw DCG scores can be difficult to interpret without normalization.
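A minimal sketch of DCG in plain Python, assuming the linear-gain form (relevance divided by the log of the position). Another common variant uses \( 2^{rel_i} - 1 \) as the gain, which is not shown here. The relevance labels are hypothetical.

```python
import math


def dcg(relevances, k=None):
    """DCG with linear gain: sum of rel_i / log2(i + 1) over positions i = 1..k."""
    if k is not None:
        relevances = relevances[:k]
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(relevances, start=1))


# Relevance labels in the order the system ranked the documents.
best_first = dcg([3, 2, 1, 0])
worst_first = dcg([0, 1, 2, 3])

print(best_first, worst_first)
```

The same four documents yield a lower DCG when the relevant ones sit at the bottom, which is exactly the position discount at work.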

Normalized Discounted Cumulative Gain (NDCG)

Definition: NDCG normalizes the DCG score, making it easier to compare across different queries with varying numbers of results and relevance scales. NDCG is typically used to evaluate the performance of a search engine or recommendation system.

Formula:

\[ \text{NDCG}_p = \frac{\text{DCG}_p}{\text{IDCG}_p} \]

where \( \text{IDCG}_p \) is the ideal DCG, which is the maximum possible DCG score for a perfect ranking.

Use Case: NDCG is ideal for comparing the effectiveness of different ranking algorithms on the same dataset.

Pros:

- Provides a normalized score between 0 and 1, facilitating easier comparison.

- Reflects the quality of the ranking across different queries.

Cons:

- Requires calculation of the ideal ranking (IDCG).
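NDCG follows directly from DCG: sort the labels in descending order to get the ideal ranking, then divide. This sketch assumes linear-gain DCG and returns 0 when no document is relevant, which is a convention chosen here for illustration.

```python
import math


def dcg(relevances):
    """DCG with linear gain for a list of labels in ranked order."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(relevances, start=1))


def ndcg(relevances):
    """DCG of the given ranking divided by the DCG of the ideal ranking."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0


perfect = ndcg([3, 2, 1, 0])   # already in ideal order
reversed_ = ndcg([0, 1, 2, 3])  # worst possible order for these labels

print(perfect, reversed_)
```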

Mean Average Precision (MAP)

Definition: MAP calculates the mean of the average precision scores for each query. Average Precision (AP) is the average of precision values at the ranks where relevant documents appear.

Formula:

\[ \text{MAP} = \frac{1}{Q} \sum_{q=1}^{Q} \text{AP}(q) \]

where \( Q \) is the number of queries and \( \text{AP}(q) \) is the average precision for query \( q \).

Use Case: MAP is commonly used in information retrieval tasks to evaluate the overall precision of a ranking system across multiple queries.

Pros:

- Combines precision across all relevant documents.

- Provides a single score to evaluate the performance across multiple queries.

Cons:

- Sensitive to the number of relevant documents per query.
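Here is a small sketch of AP and MAP in plain Python. Each query is represented as a list of booleans in ranked order, and the sketch assumes every relevant document for the query appears somewhere in that list.

```python
def average_precision(relevant_flags):
    """relevant_flags[i] is True if the document at rank i + 1 is relevant."""
    hits = 0
    precisions = []
    for rank, is_relevant in enumerate(relevant_flags, start=1):
        if is_relevant:
            hits += 1
            precisions.append(hits / rank)  # precision at this relevant rank
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(queries):
    """Mean of the per-query average precision values."""
    return sum(average_precision(flags) for flags in queries) / len(queries)


queries = [
    [True, False, True],   # AP = (1/1 + 2/3) / 2
    [False, True, False],  # AP = 1/2
]
map_score = mean_average_precision(queries)
print(map_score)
```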

Mean Reciprocal Rank (MRR)

Definition: MRR measures the average of the reciprocal ranks of the first relevant document for a set of queries. It focuses on how early the first relevant document appears in the ranking.

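Formula:

\[ \text{MRR} = \frac{1}{Q} \sum_{q=1}^{Q} \frac{1}{\text{rank}_q} \]

where \( Q \) is the number of queries and \( \text{rank}_q \) is the rank position of the first relevant document for query \( q \).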

Use Case: MRR is useful when the primary goal is to find the first relevant result quickly.

Pros:

- Simple and intuitive.

- Highlights the importance of early relevant documents.

Cons:

- Only considers the first relevant document, ignoring the rest.
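MRR is short enough to sketch in a few lines. As an assumption made here, a query with no relevant result contributes 0 to the average.

```python
def mean_reciprocal_rank(queries):
    """Average of 1 / rank of the first relevant document per query."""
    total = 0.0
    for flags in queries:
        for rank, is_relevant in enumerate(flags, start=1):
            if is_relevant:
                total += 1.0 / rank
                break  # only the first relevant document counts
    return total / len(queries)


queries = [
    [False, True, True],    # first relevant result at rank 2
    [True, False, False],   # first relevant result at rank 1
    [False, False, False],  # no relevant result
]
mrr = mean_reciprocal_rank(queries)
print(mrr)
```

In the first query, the second relevant document at rank 3 has no effect on the score.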

Precision

Definition: Precision measures the proportion of relevant documents among the retrieved documents. It’s a fundamental measure in information retrieval.

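Formula:

\[ \text{Precision} = \frac{|\{\text{relevant documents}\} \cap \{\text{retrieved documents}\}|}{|\{\text{retrieved documents}\}|} \]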

Use Case: Precision is essential when the focus is on the accuracy of the retrieved documents rather than their ranking.

Pros:

- Easy to understand and compute.

- Directly measures retrieval accuracy.

Cons:

- Does not account for the position of relevant documents.

- Can be misleading if used alone, as it ignores recall (the proportion of relevant documents that are retrieved).
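A short sketch of precision in plain Python, using hypothetical document IDs. The two calls with the same documents in different orders show that plain precision ignores position, and slicing the list gives precision at a cutoff.

```python
def precision(retrieved, relevant):
    """Fraction of retrieved documents that are relevant."""
    if not retrieved:
        return 0.0
    relevant = set(relevant)
    return sum(1 for doc in retrieved if doc in relevant) / len(retrieved)


relevant = {"d1", "d4"}
results = ["d1", "d2", "d3", "d4"]

forward = precision(results, relevant)
backward = precision(list(reversed(results)), relevant)  # same set, different order
at_one = precision(results[:1], relevant)                # precision at cutoff 1

print(forward, backward, at_one)
```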

Summary

- DCG: Measures ranking quality considering the position of relevant documents.

- NDCG: Normalised version of DCG for easier comparison.

- MAP: Mean of average precision scores across multiple queries, combining precision across all relevant documents.

- MRR: Focuses on the rank of the first relevant document.

- Precision: Proportion of relevant documents among retrieved documents.

Each evaluation measure provides unique insights, and choosing the right one depends on the specific objectives and requirements of your application. Combining these metrics can offer a more comprehensive evaluation of your ranking system’s effectiveness.


Let’s Connect

I’d love to hear your thoughts on this fascinating topic!

Feel free to connect with me on Twitter at https://twitter.com/feddernico or explore more of my content on Medium https://medium.com/@federico.viscioletti and Substack https://feddernico.substack.com

Happy learning!