As a data scientist, I’ve faced many challenges over the years. While working on various projects, I’ve learned some key strategies through trial and error. They have greatly improved my workflow and the effectiveness of my solutions. Learning these lessons involved a fair share of pain. So, if you are a junior data scientist entering the field, I’m excited to share these real-world data science strategies with you.

In this post, I’ll share the top three lessons I’ve learned from my experience in data science and development. By the end, you’ll know how to avoid common mistakes. You’ll also learn best practices that will improve your skills as a data scientist.

Lesson 1: Always Test Your Python Script on a Smaller Sample

You might feel the urge to run your Python scripts on the whole dataset right away. However, this approach can cause big problems: long run times and heavy resource use (aka lots of miserable evenings).

Instead, test your scripts on a smaller sample of the data first. This step helps you find and fix errors quickly, without wasting time or computing power (YAY).

For instance, when I was working on a large dataset to predict vessel behavior, I first tested my code on a small part of the data, either by selecting a representative vessel type or by sampling the vessel IDs. This helped me catch a critical logic error that would otherwise have taken hours to identify. By the time I ran the script on the full dataset, it worked flawlessly, saving me both time and resources.
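Here is a minimal pandas sketch of both sampling options. The DataFrame, its column names (`vessel_id`, `vessel_type`, `speed_knots`) and the helper `sample_by_vessel` are hypothetical stand-ins for a real vessel dataset. The idea it illustrates is sampling whole vessels rather than individual rows, so each sampled vessel keeps its complete history.

```python
import numpy as np
import pandas as pd


def sample_by_vessel(df: pd.DataFrame, frac: float = 0.05,
                     id_col: str = "vessel_id", seed: int = 42) -> pd.DataFrame:
    """Hypothetical helper: keep every row for a random subset of vessels.

    Sampling whole vessels (instead of random rows) keeps each vessel's track
    intact, so per-vessel logic such as sorting by time or computing lags is
    exercised the same way it will be on the full data.
    """
    ids = df[id_col].drop_duplicates()
    sampled_ids = ids.sample(frac=frac, random_state=seed)
    return df[df[id_col].isin(sampled_ids)]


# Hypothetical data: 200 vessels with 50 position reports each
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "vessel_id": np.repeat(np.arange(200), 50),
    "vessel_type": np.repeat(rng.choice(["cargo", "tanker", "fishing"], size=200), 50),
    "speed_knots": rng.uniform(0, 20, size=200 * 50),
})

# Option 1: a random 5% of vessels, with all of their rows
sample = sample_by_vessel(df, frac=0.05)
print(sample["vessel_id"].nunique(), len(sample))  # 10 500

# Option 2: a single representative vessel type
cargo_only = df[df["vessel_type"] == "cargo"]
```

Once the script runs cleanly on `sample` (or `cargo_only`), the same code can be pointed at `df`.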

Testing on a smaller sample is crucial because it allows you to debug and optimise your code before scaling up.
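One way to make sample runs a habit is to build the option into the script itself. The sketch below assumes a hypothetical `load_data`/`run_pipeline` pair standing in for your own code, and adds a `--sample-frac` command-line flag (also an illustrative name) so the same script can run on 1% of the rows while debugging and on everything once it works.

```python
import argparse

import numpy as np
import pandas as pd


def load_data(n_rows: int = 100_000, seed: int = 0) -> pd.DataFrame:
    """Hypothetical loader: stands in for reading your real dataset."""
    rng = np.random.default_rng(seed)
    return pd.DataFrame({"x": rng.normal(size=n_rows)})


def run_pipeline(df: pd.DataFrame) -> dict:
    """Hypothetical pipeline step: replace with your own processing."""
    return {"rows": len(df), "mean_x": float(df["x"].mean())}


def main(argv=None) -> dict:
    parser = argparse.ArgumentParser(description="Run the pipeline on all or part of the data.")
    parser.add_argument("--sample-frac", type=float, default=1.0,
                        help="Fraction of rows to use, e.g. 0.01 while debugging.")
    args = parser.parse_args(argv)
    if not 0 < args.sample_frac <= 1:
        parser.error("--sample-frac must be in (0, 1]")

    df = load_data()
    if args.sample_frac < 1.0:
        # Fixed random_state so repeated debug runs see the same rows
        df = df.sample(frac=args.sample_frac, random_state=42)
    print(f"Running on {len(df)} rows")
    return run_pipeline(df)


if __name__ == "__main__":
    main()
```

Assuming the script is saved as `pipeline.py`, you would run `python pipeline.py --sample-frac 0.01` during development and drop the flag for the full run.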

Now, let’s move on to the next lesson.

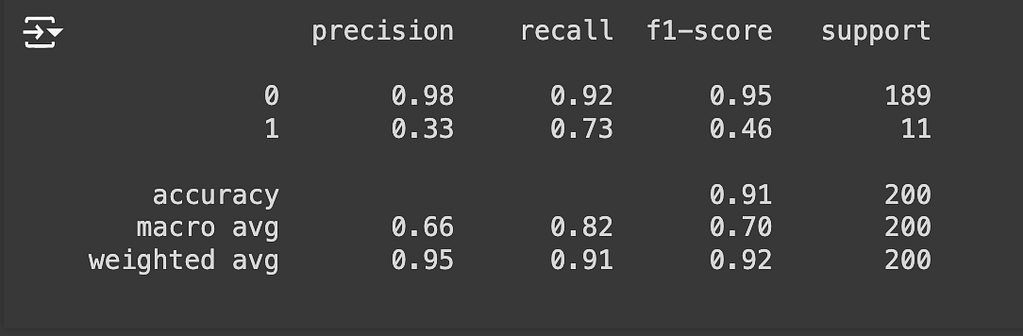

Lesson 2: Don’t Use SMOTE or Any Other Over/Under Sampling Techniques in Production

Many data scientists believe that SMOTE, or other over/under sampling techniques, can fix imbalanced data (SPOILER ALERT: I was one of them). These techniques can boost model performance in development, but they often fail in production.

Many see these methods as quick fixes, but they can cause new issues when used in real-world scenarios. It’s important to recognise their limits and focus on sustainable solutions.

Common misconceptions about sampling techniques:

- They provide a solution for data imbalance.

- Maintain the integrity of the original dataset.

- Are as effective in production as they are in development.

But the brutal truth is:

- They can distort the true distribution of the data.

- May not generalise well to new, unseen data.

- Can lead to overfitting, which reduces the model’s performance in production.
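To see the first point in practice, the sketch below uses plain random oversampling (duplicating minority rows with NumPy) rather than SMOTE itself, to avoid an extra dependency, and a scikit-learn logistic regression as the model; both are assumptions chosen for illustration. The model trained on the rebalanced 50/50 data learns a much higher base rate than the roughly 10% positives in the test set, so its average predicted probability should drift upward, while the model trained on the original data should stay close to the observed rate.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic imbalanced data: about 90% class 0, 10% class 1
X, y = make_classification(n_samples=5000, n_features=20, weights=[0.9, 0.1],
                           flip_y=0, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=42)

# Random oversampling: duplicate minority rows until both classes are equal in size
rng = np.random.default_rng(0)
minority_idx = np.flatnonzero(y_train == 1)
majority_idx = np.flatnonzero(y_train == 0)
extra = rng.choice(minority_idx, size=len(majority_idx) - len(minority_idx), replace=True)
idx = np.concatenate([majority_idx, minority_idx, extra])
X_res, y_res = X_train[idx], y_train[idx]

model_orig = LogisticRegression(max_iter=1000).fit(X_train, y_train)
model_res = LogisticRegression(max_iter=1000).fit(X_res, y_res)

# Compare the real positive rate with each model's average predicted probability
true_rate = y_test.mean()
mean_p_orig = model_orig.predict_proba(X_test)[:, 1].mean()
mean_p_res = model_res.predict_proba(X_test)[:, 1].mean()
print(f"Observed positive rate:              {true_rate:.3f}")
print(f"Mean predicted P(y=1), original:     {mean_p_orig:.3f}")
print(f"Mean predicted P(y=1), oversampled:  {mean_p_res:.3f}")
```

If the oversampled model's probabilities feed a downstream decision (a fixed threshold, an expected-cost calculation), that upward shift is exactly the kind of distortion that shows up only once the model meets production data.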

The key to success in this case is to leave the data distribution untouched and use algorithms that can account for class imbalance directly, for example by weighting the classes during training.

For example, when working on supervised classification problems, I often use LightGBM because it trains and predicts quickly. It has a parameter called is_unbalance, which can be set to True when the target variable is imbalanced.

Here’s a quick example to test:

```python
# Real-World Data Science Strategies - 2:
# Don't Use SMOTE or Any Other Over/Under Sampling Techniques in Production

import lightgbm as lgb
from sklearn.datasets import make_classification
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split

# Generate a synthetic imbalanced dataset (about 90% class 0, 10% class 1)
X, y = make_classification(n_samples=1000, n_features=20, n_classes=2,
                           weights=[0.9, 0.1], flip_y=0, random_state=1)

# Split the dataset into training and testing sets,
# keeping the same class ratio in both (stratify=y)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42)

# Create a LightGBM dataset. No manual sample weights here: 'is_unbalance'
# below already up-weights the minority class, and combining it with manual
# weights would apply the correction twice. (To use manual weights instead,
# pass weight=... to lgb.Dataset and remove 'is_unbalance'.)
d_train = lgb.Dataset(X_train, label=y_train)

# Set LightGBM parameters
params = {
    'objective': 'binary',
    'boosting_type': 'gbdt',
    'metric': 'binary_logloss',
    'is_unbalance': True,  # let LightGBM reweight the classes automatically
    'boost_from_average': True,
    'learning_rate': 0.05,
    'num_leaves': 31,
    'feature_fraction': 0.9,
    'bagging_fraction': 0.8,
    'bagging_freq': 5,
    'verbose': -1,
}

# Train the model
bst = lgb.train(params, d_train, num_boost_round=100)

# Predict on the test set: predict() returns positive-class probabilities
y_pred = bst.predict(X_test)
y_pred_binary = (y_pred > 0.5).astype(int)

# Evaluate the model
print(classification_report(y_test, y_pred_binary))
```

While it’s tempting to rely on under/over sampling techniques, it’s essential to acknowledge their limitations and focus on more robust solutions. Now, let’s discuss the final lesson.

Lesson 3: Avoid Overcomplicating Your Solutions

A common mistake among data scientists is overcomplicating their solutions. This often involves using overly complex models or unnecessary features. This, in turn, can hinder progress and make the system harder to maintain.

Overcomplicating your solution can lead to more computation time. It also causes trouble in debugging, maintaining, and updating the model. Instead, focus on simplicity and clarity in your approach.

For example, in one of my early projects, I used a complex model with many features to predict a binary target variable. While it worked well during development, it was challenging to deploy and maintain. Later, I replaced it with a much simpler logistic regression model. It performed nearly as well and was much easier to manage and update.

This taught me the importance of simplicity and clarity. Simple solutions are easier to understand and maintain. They often work better in the long run than complex ones.

The goal is to make models that are not only effective but also practical for the real world.

To summarise:

- Always test your Python scripts on smaller samples to save time and resources.

- Avoid using SMOTE or other resampling techniques in production. They often fail to generalise to new data.

- Keep your solutions simple and maintainable to ensure long-term success.

These are some of the real-world data science strategies that have been important in my journey as a data scientist. Adding them to your practice will prepare you to face real challenges and help you deliver impactful solutions.

If you like advanced data science techniques, you will probably love this other article I wrote about encoding categorical variables.

Or, if you want, you can follow me on Twitter/X.